AI: Transformative vs Evolutional

In the world of artificial intelligence (AI), including machine learning (ML), large language models like GPT, and image generation models such as SD-XL/Dall-E, we’ve witnessed a remarkable evolution. What was once the stuff of science fiction has become an integral part of our daily lives, affecting various aspects of society.

The question that arises is whether AI represents a truly transformative force capable of reshaping entire industries and societal structures, or if it’s primarily an evolutionary process, gradually enhancing existing systems. Perhaps it’s a blend of both.

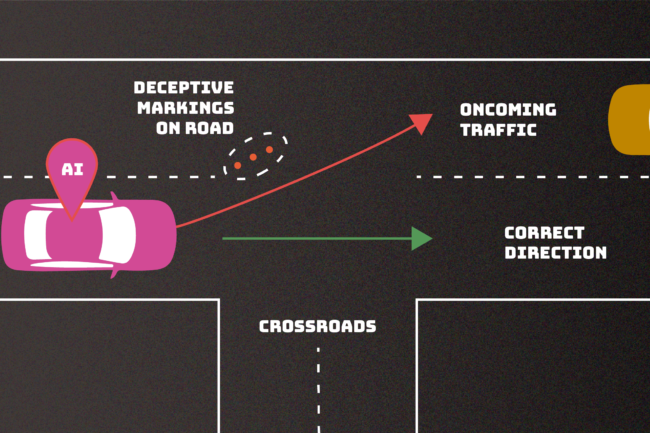

Given this perspective, the practical application of generative AI at an enterprise level still needs to be proven. Issues like the propensity to provide inaccurate information and the ability to generate plausible completely false answers are problematic in an enterprise-level environment. You can’t have your key mechanisms driving economies and productivity and profit tripping out when it gets a bug.

My take is enterprise-level AI will not happen at scale in 2024, and I don’t expect to see real bottom-line impact until Q1 25 as it gradually overcomes current practical challenges to provide real utility at enterprise scale.

Make no mistake, even with my “it’s going to take-a-second” lens, valuable use cases will emerge and make a big impact, disrupting industries and creating unforeseen opportunities.

Right now, examples fall across call centers, protein folding insights, balancing load in production, energy, traffic, and streaming, optimizing marketing campaigns, medical differentials. These are steps towards a transformative shift, and true application is the likely outcome.

Feature or Glitch

Inaccuracies in language models are fine when you are creating cool visuals, not so much in spreadsheets that bottom lines are based on. The glitch is the feature (again).

A great example of this phenomenon can be seen in the emergence of snowy static on televisions when no signal was clear, and VHS tapes ended became a key effect across movies in video programming over the next two decades.

With that thinking in hand, let’s look at the media landscape and multi-modal future. These media-to-media models generate vector graphics, video, audio, and text into a tapestry of creative possibilities. The “glitch” becomes a creative brushstroke, allowing for the crafting of immersive experiences, artistic expressions, and content frankly unimagined at this point.

The barrier between producer and viewer or consumer disappeared with TikTok and social media. The tooling to create these ‘environments’ allows creators to shape (not edit!) media is a key emergent capability. The string of companies tackling this has grown from a few to a dozen in the last six months.

Media: Scale and Value

In the same timeline, scale becomes the enemy of traditional media, having more legacy assets downstream from M&A becomes a secular problem. This linear networks math regarding scale is dead; the value of cable networks over the past 30 years has seen a trajectory of rapid growth, peak, and then a gradual decline.

Ad revenue coming from network and local affiliates and amassing a large channel lineup does not increase value as it once did, although it’s still a strong business.

There is a larger tale to be told here about why in the world did companies that were the producers of content think it’s a good idea to become a player and service? Inheriting all that goes with it, a big bag of hurt and expenses to attract viewers, maintain the platform and on and on, my head hurts. All delivered through a wire when they had ‘cable’ + OTA. Sirens call to rock, and shiny-object-syndrome terms come to mind here.

There does not need to be the current, checks notebook, somewhere between 150 to 200 solely video-focused SVOD services (US). The economic burden of owning both parts of the vertical is significant and not tenable for most big players. The number is likely to be under 5 and more likely, less than 3.

Disney may be the only player that has made a successful transition from legacy to digital. TL/DR Discounted M&A ahead, less production budgets, fewer shows, less chances for hit franchises or sticking with shows until they become hits.

So the familiar B&C scale has collapsed with the rise of digital media, streaming services, and personalized content, aka TikTok, YouTube. The relationship between channels and viewers has become more complex, and the value of each legacy channel has diminished.

At the same time, the gap between producer and viewer has also collapsed, as evidenced by Insta + TikTok creator economies. These producers are about to get some new tools.

The Year Ahead

I fully expect one AI breakout of 2024 will be a visual-forward AI offering. A program, show, channel, a persona, a step towards the Max Headroom media future we all need. The scale and costs to do this will help define the space.

The economics will not be legacy; the audience, not weaned on legacy media but social media, makes this transition feel natural to them. The scale happens when display is solved for in a creative manner, providing an organizing principle that can move the whole space further. That will take a keen curatorial eye and a lot less financial resources to advance.

While there are a dozen players making tooling, the best of them, a small handful, are still far away from meaningful utility, but that will happen. It’s always the worst it will be with AI as it continues to evolve. Right now I can’t even look at synthetic output, which has settled into a style that’s not satisfying. That will change, and with that, so will everything.